lens

Outer Reaches

Extending human touch beyond the fingertips

Illustration © 2021 Cynthia Turner

Imagine if our sense of touch were no longer limited by physical location. An engineer on Earth could have the sensation of holding and manipulating a tool in space; a medical student could experience the pressure of a surgeon's grip on a scalpel cutting through skin; or a physician could remotely control a robotic arm and actually feel for swollen glands in a patient's neck. These are some of the uses of the NeuroReality avatar system that the Human Fusions Institute team has contemplated.

One day last August, Case Western Reserve graduate student Luis Mesias put on a virtual reality (VR) headset and a glove equipped with electrodes—and reached into the future.

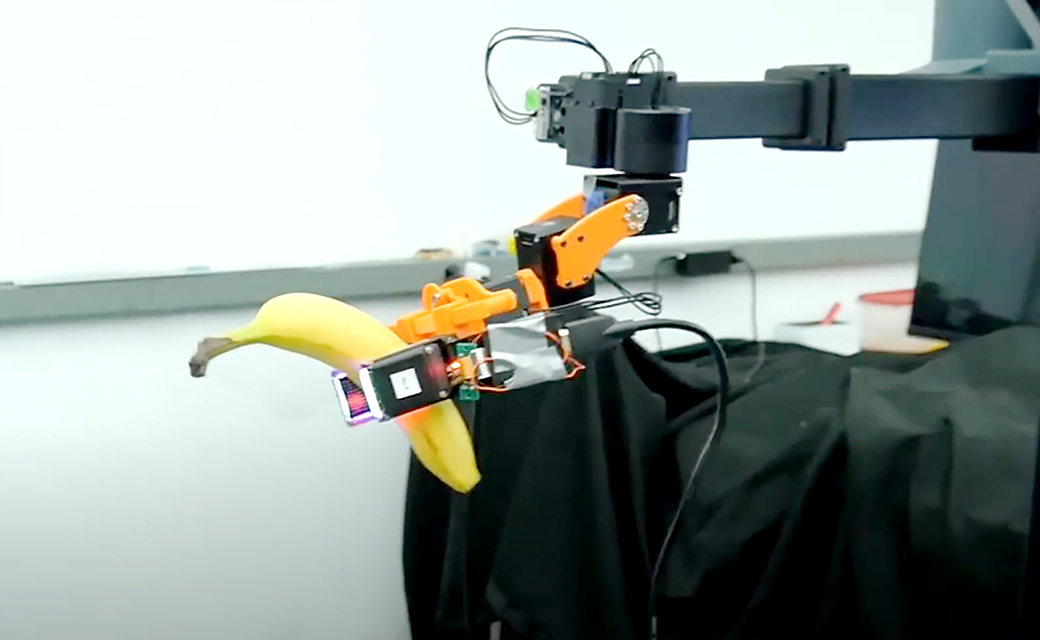

From his Cleveland apartment, Mesias used his gloved hand to successfully control a robotic arm 2,300 miles away in Los Angeles, manipulating a toothpaste tube, a Pringles can and a banana. Even more striking: He experienced a sense of touch.

Dustin Tyler

"He could actually feel the amount of pressure on the object in Los Angeles in his fingertips in Cleveland," said Dustin Tyler, PhD (GRS '99, biomedical engineering), the Kent H. Smith II Professor of Biomedical Engineering and founding director of an institute based at CWRU that aims to extend the tactile reach of humans beyond their physical location.

Launched four years ago, the Human Fusions Institute includes neuroscientists, engineers, sociologists, clinicians, ethicists, roboticists and phenomenologists.

They come from six colleges and universities, including the University of California, Los Angeles, home to the robotic arm Mesias controlled in an unprecedented test of what Tyler calls the "NeuroReality" platform. The institute is supported in part by grants and by a portion of a $20 million strategic initiative fund for biomedical engineering that the late Robert Aiken (CIT '52) and his wife, Brenda, committed in 2017.

The Cleveland-Los Angeles NeuroReality test was one more milestone in Tyler’s work. His team previously gave individuals who had lost a hand to amputation a sense of touch with a specialized prosthetic linked to their brains and nervous systems. It was an emotional experience for the recipients, and revelatory for Tyler. "Their language changes entirely," he said. "They start to describe 'their hand' doing tasks."

Standing in Cleveland, Case Western Reserve graduate student Luis Mesias (pictured above) used a virtual reality headset and a specialized glove to control this robotic arm in Los Angeles, both moving and feeling the banana in its gripper.

The August trial was an extension of this effort, using the same principles of neural interfacing to extend the range of an individual’s sense of touch. "That prosthetic is a robot, and I can place it anywhere in the world and still connect to it," Tyler said. "And it will still be 'their hand.'"

Tyler has focused particularly on health care applications, which took on added significance during the pandemic amid concerns about frequent staff visits to the hospital rooms of patients with COVID-19 and about the need for better telemedicine options.

"If you talk to any clinician, they'll say a big part of the work is the laying of hands—the feeling, the touch," Tyler said.

Remote care also could help democratize medicine, he said, allowing doctors and nurses anywhere in the world to serve patients in hard-to-reach, underserved locations.

Possible military applications also show promise, notably the potential to use remote sensory systems to help defuse explosives without loss of life. "With this technology, [soldiers] could actually feel like they're there," Tyler said. The team also is exploring ways for people to perform work on the International Space Station without leaving Earth, he said.

And beyond replicating human abilities, he noted, the platform could eventually extend them. Imagine, for example, using a remote system like this to control a massive construction crane with just a hand and glove. "I could pick up a block and move it. It could be a 10-ton block, but I’d feel like I’m just picking up Legos and putting them somewhere on the building," Tyler said.

As Tyler works to blend human interaction with robotic systems, his research raises deep questions about what it means to be human and how far we should stretch our natural limits.

"Where are the boundaries if I can expand my presence to anywhere?" he asked. "Philosophically, there's a lot of interesting questions that happen there."

That's why he has brought in an interdisciplinary group of experts to help researchers consider these issues from the outset, not after the technology is in use.

Tyler believes the institute will be a pioneer and leader in laying the foundation for the future of the human-technology relationship, but he also knows the race is on.

Human Fusions was recently named one of 38 semifinalist teams in a $10 million global avatar competition hosted by XPRIZE, a nonprofit that designs and operates competitions to catalyze innovations to benefit humanity. The ANA Avatar XPRIZE grand prize will be awarded in 2022 to the team whose system enables an operator to "see, hear, and interact within a remote environment in a manner that feels as if they are truly there."

"Most of the other competitors have really cool robotics that look very humanoid," Tyler said. His team focused on showing how humans could engage with remote environs.

"I think we probably have the most advanced neural interface [technology]," he said. Their entry includes work from recent trials in which his team controlled a remote robotic hand to complete a series of tasks alone or in tandem with humans, including such actions as clinking plastic champagne flutes.

"The ANA Avatar XPRIZE competition isn’t the end point for us, but it is the embodiment of what we’re trying to do. That is, to think about and develop the symbiotic relationship between the machine and the human."

—Dustin Tyler

A Team of Collaborators

CWRU community members working on the Human Fusions technology include Michael Fu, PhD, the Timothy E. and Allison L. Schroeder Assistant Professor of Computer and Data Sciences; Emily Graczyk, PhD, who in July is slated to become an assistant professor in biomedical engineering; and Pan Li, PhD, an associate professor in electrical, computer, and systems engineering. Partner institutions are the University of California, Los Angeles; Cleveland State University; Carnegie Mellon University; the University of Wyoming; and Tuskegee University.

For more information, visit humanfusions.org.