Date Approved: September 16, 2011

Effective Date: September 16, 2011

Responsible Official: Chief Information Security Officer

Responsible Office: [U]Tech Information Security Office

Revision History: Version 1.0; dated September 12, 2011

Related legislation and University policies:

- Policy I-1 Acceptable Use of Information Technology (AUP)

- The CWRU Information Technology Strategic Plan 2016

Review Period: 5 Years

Date of Last Review: September 12, 2011

Related to: Faculty, Staff

Purpose

This procedure defines a standard policy for managing information security-related risk and sets forth baseline terminology for security planning and compliance efforts. The objectives of risk management activities include:

- Establishment of a risk-aware culture through an effective, ongoing dialog about security risks between the system owners and the operations and project teams (including IT)

- Identification and categorization of information and information systems

- Enable all team members to participate in identification of risks through the use of standard terminology and qualitative probability and impact definitions.

- Ensure system owners are aware of the Top 20% or Top 5 risks to their information systems and take appropriate steps to manage those risks through security plans

- Ensure appropriate attention is focused on issues and concerns

- Clarify the role of the Information Security Office in the management of enterprise-wide information security risks.

Scope

This standard applies to all university information technology and information management systems and addresses both enterprise and system risk. All information systems (including outsourced IT services) shall undergo some level of information security risk assessment. Information systems deemed to be of critical value to Case Western Reserve University, including but not limited to IT systems for which formal project management is required, shall undergo the formal risk management activities defined in this Policy.

Cancellation

Not applicable.

Procedure Statement

General

All information systems of critical value to Case Western Reserve University shall undergo some level of information security risk assessment. Risk management is a systems lifecycle approach, not a single point-in-time evaluation. It is the responsibility of the system owner to ensure that risk to the university is managed adequately and that the system does not expose the university to unacceptable risk.

Two principal variants of risk are common to the university environment: enterprise risk and system risk. Enterprise security risks are common to all information across university operations, and the identification and management of these risks are subject to the risk tolerance of the university's executive management. The Information Security Office is responsible for supporting the Office of the President and Provost, through the VP of University Technology Services/CIO, in managing enterprise security risk through the deployment of university-wide programs, controls, practices, and policies. System security risks involve specific systems and business processes, which most often have a single department or system owner. The system owner, as defined in Information Systems Security Plans, is responsible for ensuring adherence to university-wide risk management activities and for managing system-specific risks.

This procedure outlines the prescribed approach to manage risk in an information security context.

Procedure

The university uses a combination of two standard approaches to managing information security risks: Continuous Risk Management (CRM) and the OCTAVE℠ Risk Assessment Method. These approaches are designed as a “supported self-service” program in which system owners and their operational and project teams, facilitated by Information Security Office analysts, are empowered to identify and manage information security risks to their computing environment.

The OCTAVE method is embedded in the identification and analysis phases of CRM, which together are commonly referred to as a risk assessment.

Continuous Risk Management (CRM)

The CRM method is an iterative, qualitative risk management framework that adapts to the dynamic environment of university research, education, and supporting IT systems and infrastructure. CRM defines standard terms and processes for managing security risk. CRM was developed by the Software Engineering Institute (SEI) for software engineering organizations, and the method also works well for information security risk management. Fundamental to the risk management process is the development of a risk inventory for each system, together with the actions, in place or planned, that the system owner takes to address the top-ranked risks. The inventory is most commonly implemented as a risk listing in the system's Information Systems Security Plan.

Modified SEI OCTAVE℠ Risk Assessment Method

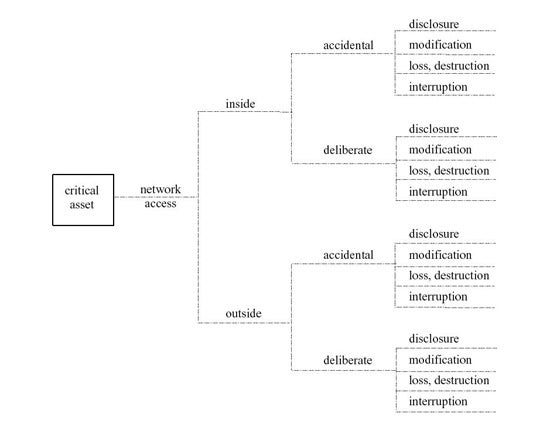

The OCTAVE method, developed by the Software Engineering Institute (SEI), defines basic threat profiles that are used in the risk assessment process. The university has modified the method to identify Conditions and Consequences based on OCTAVE threat profiles. These profiles are developed for the critical assets of the system and are used as a visual tool to develop the risk inventory. The OCTAVE threat categories used include:

- humans with network access

- humans with physical access

- system problems

- other problems

Each of these categories is evaluated in a threat tree, as seen in this example for humans with network access. Network access can come from the inside or the outside, and can be accidental or deliberate. Every branch has the same potential outcomes: disclosure, modification, loss/destruction, and interruption.
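Because every branch of the tree leads to the same four outcomes, the threat tree for humans with network access can be read as a cross product of actor location, motive, and outcome. The following Python sketch enumerates those paths for one asset, as a checklist a team might walk through during an assessment. The function name and the example asset are hypothetical, and the sketch covers only this one threat category.

```python
from itertools import product

# Branches of the "humans with network access" threat tree, as described above.
ACCESS = ("inside", "outside")
MOTIVE = ("accidental", "deliberate")
OUTCOMES = ("disclosure", "modification", "loss/destruction", "interruption")


def network_threat_paths(asset):
    """Return every (asset, access, motive, outcome) path through the tree.

    Hypothetical helper: each path is a candidate condition/consequence
    pair for the team to discuss, not a confirmed risk.
    """
    return [
        (asset, access, motive, outcome)
        for access, motive, outcome in product(ACCESS, MOTIVE, OUTCOMES)
    ]


if __name__ == "__main__":
    paths = network_threat_paths("student information system")  # example asset
    print(len(paths))  # 2 x 2 x 4 = 16 paths
    print(paths[0])    # ('student information system', 'inside', 'accidental', 'disclosure')
```

Enumerating the full tree keeps a team from overlooking a branch; the team then discards the paths that do not apply to the asset.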

Managing Risk Using CRM

- Risk Inventory: The overall goal of CRM is to develop a Risk Inventory. The inventory is presented as the core of the Information Systems Security Plan.

- The CRM Process Map describes the cycles of risk management. In this manner, the program or project team can complete successive cycles by focusing on the top risks, without being overwhelmed by the large number of risks that can arise in the information security arena.

- Identify- Search for and locate risks before they become problems, through small risk assessments using the OCTAVE method.

- Analyze- Convert risk data into usable information for determining priorities and making decisions. The Risk Inventory is generated here, and the Top 20% or Top 5 risks are selected for action.

- Plan- Translate risk information into planning decisions and mitigation strategies (both present and future), and implement those actions for the Top 5 risks (at a minimum). These actions are presented in the Information Systems Security Plan, which is approved by the system owner.

- Track- Monitor risk indicators and mitigation efforts.

- Control- Correct deviations from the risk mitigation plan and decide on future actions.

- Communicate and Document- Provide information and feedback to the project on risk activities, future risks, and emerging risks.
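The procedure does not prescribe how risks are ranked or how the "Top 20% or Top 5" cut-off is computed. The following Python sketch shows one possible reading, for illustration only: each risk is scored as probability level times impact level (low = 1, medium = 2, high = 3), ties are broken by timeframe (near term first), and the cut-off is the larger of the top 20% (rounded up) or five risks, consistent with the Plan step's "Top 5 risks (at a minimum)". The `Risk` class, its field names, and the scoring scheme are assumptions, not university definitions.

```python
from dataclasses import dataclass

# Assumed numeric scores for the qualitative ratings (not defined by the procedure).
LEVEL = {"low": 1, "medium": 2, "high": 3}
URGENCY = {"near term": 0, "mid term": 1, "long term": 2}


@dataclass(frozen=True)
class Risk:
    risk_id: str      # hypothetical identifier for a Risk Inventory entry
    probability: str  # "low" | "medium" | "high"
    impact: str       # "low" | "medium" | "high"
    timeframe: str    # "near term" | "mid term" | "long term"


def top_risks(inventory):
    """Return the risks to act on in this CRM cycle, highest priority first."""
    ranked = sorted(
        inventory,
        # Higher probability x impact first; among equals, the more urgent first.
        key=lambda r: (-LEVEL[r.probability] * LEVEL[r.impact], URGENCY[r.timeframe]),
    )
    top_20_percent = (len(ranked) + 4) // 5  # ceiling of 20% using integer math
    return ranked[: max(top_20_percent, 5)]  # slicing caps this at the inventory size


if __name__ == "__main__":
    inventory = [
        Risk("R1", "low", "low", "long term"),
        Risk("R2", "high", "high", "mid term"),
        Risk("R3", "high", "high", "near term"),
        Risk("R4", "medium", "high", "near term"),
    ]
    print([r.risk_id for r in top_risks(inventory)])  # ['R3', 'R2', 'R4', 'R1']
```

Lower-ranked risks left out of one cycle remain in the inventory and can rise to the top in later cycles.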

Conducting System Risk Assessment

The risk assessment effort is intended to capture all risks pertinent to the system at the time of the assessment. Participants include system developers, system owners, user representatives, and system managers. The assessment may or may not include risk ranking or the determination of mitigation activities. The Information Security Office facilitates these assessments, drawing on known threat conditions based on its knowledge of the campus computing environment and on past and present threat information.

- Pre-Assessment Planning: Gather information on system use and data flow (actual or proposed), and identify the management and technical teams. Information on risks to existing or similar systems, or from previous assessments, should be brought to the assessment.

- Scope: Develop a context model to map information flow and storage. Use the context model to identify key assets of the system. Identify the sources of information assets and categorize them as Public, Internal Use, or Restricted according to the university standard.

- Evaluate OCTAVE Threat Trees for key assets of the system, using the four main OCTAVE threat categories. Note: for smaller projects or systems in the early stage of development, risks may also be assessed using the Information Security Office Security Design Review questionnaire, team brainstorming sessions, or individual effort, at the discretion of the project manager. Systems at the level where [U]Tech formal project management is required shall have some form of OCTAVE threat assessment performed.

- Compile the resulting condition/consequence statements into a risk index, which is added to the security plan.

- Plan and schedule analysis sessions.

Risk Attributes

A risk has a probability of occurrence. Once the adverse event has occurred, its probability is 1: it is no longer a risk but a problem, and problem management takes over. Therefore, the context of the risk description is used to clearly define the risk conditions and the probable adverse events. In the CRM method, each risk statement consists of two parts:

- Condition: a description of a single current cause for concern, based on the OCTAVE threat profile. The condition statement encompasses common threat and vulnerability information, which forms the input to the likelihood of occurrence.

- Consequence: an initial description of the perceived impact of the cause for concern. The consequence should also account for intermediate and long-term impacts. Additional mission success criteria can be added to clarify the severity of the impact.

As a result of the CRM analysis phase, each risk will have three defined qualitative attributes:

- Probability

  - low- deemed improbable, less than 20% chance of occurrence

  - medium- probable

  - high- very likely, has been experienced in other similar business/research areas, more than 70% chance of occurrence

- Impact (can be based on performance, safety, cost, schedule, in addition to the …)

  - low- marginal impact should the event occur (e.g. disclosure of public or internal information)

  - medium- critical (e.g. disclosure of restricted information)

  - high- catastrophic (e.g. total loss of data assets or loss of ability to achieve mission, no alternatives exist)

- Timeframe (for reaction when a risk is realized)

  - long term- action needs to take place beyond 12 months

  - mid term- action needs to take place between 2 and 12 months

  - near term- action needs to take place within the next 2 months
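The probability and timeframe definitions above amount to simple threshold rules. The following Python sketch encodes them as a hypothetical aid for teams that estimate a numeric chance or a number of months before choosing a rating. Two details are assumptions rather than policy: "medium" probability is taken to cover 20% to 70% inclusive, since the procedure gives no numeric range for it, and exactly 2 months is treated as near term and exactly 12 months as mid term. Impact is left out because its levels are defined by example rather than by a measurable threshold.

```python
def probability_rating(chance):
    """Map an estimated chance of occurrence (0.0 to 1.0) to low/medium/high."""
    if not 0.0 <= chance < 1.0:
        # At probability 1 the event has occurred: it is a problem, not a risk.
        raise ValueError("a risk's probability must be at least 0 and below 1")
    if chance < 0.20:
        return "low"      # "deemed improbable, less than 20% chance"
    if chance > 0.70:
        return "high"     # "very likely ... more than 70% chance"
    return "medium"       # assumption: everything from 20% to 70% inclusive


def timeframe_rating(months_until_action):
    """Map when action is needed, in months from now, to a timeframe rating."""
    if months_until_action < 0:
        raise ValueError("months until action cannot be negative")
    if months_until_action <= 2:
        return "near term"  # assumption: exactly 2 months counts as near term
    if months_until_action <= 12:
        return "mid term"   # assumption: exactly 12 months counts as mid term
    return "long term"


if __name__ == "__main__":
    print([probability_rating(p) for p in (0.1, 0.2, 0.5, 0.7, 0.9)])
    # ['low', 'medium', 'medium', 'medium', 'high']
    print([timeframe_rating(m) for m in (1, 2, 6, 12, 18)])
    # ['near term', 'near term', 'mid term', 'mid term', 'long term']
```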

Example Risk Statement

"Given the condition, there is a possibility that consequence will occur."

| Part | Statement |
|---|---|
| Condition | Users tend to leave their computers unattended while logged into applications where restricted data are managed, in locations where the general public has physical access. |
| Consequence | Using physical access to an unattended computer, an unauthorized outsider may deliberately access restricted information, resulting in disclosure, modification, or destruction of the data. |
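The two-part risk statement maps naturally onto a small record type. The following Python sketch stores one condition and one consequence and fills in the standard sentence template, lightly adapted to "Given that" so that a condition written as a clause reads grammatically. The `RiskStatement` class and its `render` method are hypothetical names, not part of any university tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RiskStatement:
    """One CRM risk statement: a single condition and its consequence."""

    condition: str    # the single current cause for concern
    consequence: str  # the perceived impact if the adverse event occurs

    def __post_init__(self):
        # A statement is incomplete without both parts.
        if not self.condition.strip() or not self.consequence.strip():
            raise ValueError("both condition and consequence are required")

    def render(self):
        """Fill in the standard condition/consequence sentence template."""
        return (f"Given that {self.condition}, there is a possibility that "
                f"{self.consequence} will occur.")


if __name__ == "__main__":
    statement = RiskStatement(
        condition="users leave logged-in computers unattended in publicly accessible areas",
        consequence="unauthorized disclosure, modification, or destruction of restricted data",
    )
    print(statement.render())
```

Keeping the two parts as separate fields, rather than one free-text sentence, makes it easier to sort or filter a risk index by condition later.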

Planning Stage Risk Action Standards

The purpose of risk planning is to refine the knowledge of the risks identified and to address the most important risks first. Efficiency is necessary to minimize cost and schedule impact on information systems and projects. Since CRM is a cyclical process, lower-priority risks will “rise to the top” as the most important risks are addressed in early cycles. Once risks are prioritized, action plans are created to address the Top 20% or Top 5 risks. There are four categories of risk action:

- Watch- monitor changes to probability and impact conditions, but delay action (and any possible cost expenditure). This is generally appropriate when impact or probability is currently low but is suspected to be increasing.

- Accept- the project and management have accepted that this risk is tolerable to the mission of the system. Accepted risks with probability and impact above medium must have a contingency plan to address the impact.

- Research- inadequate information exists at the time of the analysis, so the project team needs to learn more about the risk. Risks categorized as Research must have a defined timeframe by which the research is due to be complete.

- Mitigate- take action by allocating resources to implement controls or process changes. Discrete actions are defined in the mitigation plan, with the following objectives:

  - lower the probability of occurrence

  - lower or soften the impact

  - extend the time frame for reaction

The risk index, with risk actions defined, is delivered as part of the Information Systems Security Plan. The project assessment team must also keep in mind that taking calculated risks is what permits innovation, and that the purpose of risk management activities is to plan for managing risks that are unacceptable to the organization.
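The action categories above carry a few checkable requirements: an accepted risk above medium needs a contingency plan, a Research action needs a completion date, and a Mitigate action needs discrete steps. The following Python sketch checks one risk index entry against those rules. The `RiskAction` fields and the `action_problems` function are hypothetical, and reading "probability and impact above medium" as both rated high is an assumption.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional, Tuple

ACTIONS = {"watch", "accept", "research", "mitigate"}


@dataclass
class RiskAction:
    action: str                          # one of ACTIONS
    probability: str                     # "low" | "medium" | "high"
    impact: str                          # "low" | "medium" | "high"
    contingency_plan: Optional[str] = None
    research_due: Optional[date] = None  # date the research is due to be complete
    mitigation_steps: Tuple[str, ...] = ()


def action_problems(entry):
    """Return a list of rule violations for one risk index entry (empty if none)."""
    problems = []
    if entry.action not in ACTIONS:
        problems.append(f"unknown action {entry.action!r}")
    # Assumption: "probability and impact above medium" means both are rated high.
    if (entry.action == "accept" and entry.probability == "high"
            and entry.impact == "high" and not entry.contingency_plan):
        problems.append("accepted high/high risk has no contingency plan")
    if entry.action == "research" and entry.research_due is None:
        problems.append("research action has no completion date")
    if entry.action == "mitigate" and not entry.mitigation_steps:
        problems.append("mitigate action lists no discrete steps")
    return problems


if __name__ == "__main__":
    print(action_problems(RiskAction("accept", "high", "high")))
    # ['accepted high/high risk has no contingency plan']
    print(action_problems(RiskAction("research", "medium", "low",
                                     research_due=date(2012, 3, 1))))
    # []
```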

Tracking Stage Standards

Risk tracking is performed as part of the information systems management function and is best achieved through periodic updates to the Information Systems Security Plan. The system owner is responsible for ensuring that risk actions have taken place, determining the effectiveness of mitigation activities, and ensuring that a new risk assessment cycle is conducted upon any significant change to business practices or system implementation. Risk status is to be reported at monthly project status meetings or in the [U]Tech project report.

Control Stage Standards

During project status meetings, the project manager shall decide whether to close risks; continue to research, mitigate, or watch risks; re-plan or re-focus actions or activities; or invoke contingency plans. Top 5 (or Top 20%) risk elements that have enterprise impact may be closed only with the concurrence of the system owner. Project status meetings are also when the project manager authorizes and allocates resources toward risks.

Communication and Documentation Stage Standards

The risk index is reported as part of the Information Systems Security Plan. Project status meetings should use the standard Risk Index table. For systems subject to FISMA, long-term risk mitigation activities (those that require implementation time frames longer than the initial systems development cycle) will have a published Plan of Action and Milestones (POA&M), for top risks only.

Risk Acceptance and System Authorization Stage Standards

The individuals with the appropriate level of authority to accept responsibility for system risk (typically the System Owner) indicate their acceptance of the risk action plan by approving the Information Systems Security Plan.

Responsibility

Chief Information Security Officer (CISO): Ensure that the risk management process is established and maintained. Define the acceptable risk thresholds for the enterprise through ITSPAC Governance processes. Manage enterprise (system-wide) security risks.

Information Security Staff: Monitor security risks on a continual basis and regularly update the procedural controls based on changing security threat scenarios. Recommend acceptable risk thresholds to senior management.

System Owners: Assure that risk management activities are conducted during the system development lifecycle, and that local system risk decisions do not negatively impact the university security risk tolerance. Ensure compliance through the establishment of an information systems security plan.

Definitions

Risk: The combination of the probability that an undesired event (such as a compromise of security) will occur and prevent the organization from achieving a mission or business function, and the consequences or impact of that event. Risk generally accompanies opportunity.

Risk statement: A combination of condition (threat source and vulnerability) and consequence (impact) that describes a risk.

Federal Information Security Management Act (FISMA) of 2002: Select research systems are required, through contractual obligations, to comply with the provisions of the Act. Security requirements for risk management under FISMA are delineated by the sponsoring agency's security policy document and by the NIST Special Publication 800 series documents.

Acceptable Risk: Risk that is understood and agreed to by the system owner, program, or project, and the Chief Information Security Officer/ITSPAC Governance Committee.

System Owner: The university authority for a business process that is supported by an information system, typically the primary internal customer of the system. For example, the Chief Financial Officer is the system owner of the financial system, and the Registrar is the owner of the student information system.

Plan of Action and Milestones (POA&M)

References

Continuous Risk Management Guidebook, Software Engineering Institute, Pittsburgh, PA. http://www.sei.cmu.edu/risk/

OCTAVE℠ Threat Modeling

Managing Information Security Risks: The OCTAVE Approach, Christopher Alberts and Audrey Dorofee, Addison-Wesley (Pearson Education, Inc.), 2003.

Complete Systems Analysis: The Workbook, the Textbook, the Answers, James Robertson and Suzanne Robertson, Dorset House Publishers, 1998.

Federal Information Security Management Act (FISMA) of 2002.

NIST Special Publication 800-39, Managing Information Security Risk

Standards Review Cycle

This procedure will be reviewed every three years on the anniversary of the policy effective date, at a minimum. The standard may be reviewed and changed as needed to adapt to compliance and business needs.